Instance optimality

What does it mean that an algorithm is the best one for a given task? The usual approach is to compute the time complexity of the algorithm as a function of \(n\), always considering the worst possible \(n\)-bit input. Instance optimality is about getting an algorithm that is single-handedly the best one for all inputs at once.

Universally-optimal Dijkstra

Dijkstra’s algorithm is one of the oldest and most important algorithms. We use it to solve the shortest path problem for which it is not necessarily the best algorithm, but we know that it is the best possible algorithm for the problem of ordering the nodes by their distance from the root, provided it uses a sufficiently efficient heap.

In a paper with Bernhard Haeupler, Richard Hladík, Robert Tarjan, and Jakub Tětek, we showed that if the heap is even more efficient (has the so-called working-set property), Dijkstra’s algorithm is even universally-optimal for this problem.

Universal optimality is a property that lies between instance optimality and worst-case; it basically says that no matter how the input graph looks like, our algorithm adapts to it ideally (but we are worst-case with respect to the weights on it).

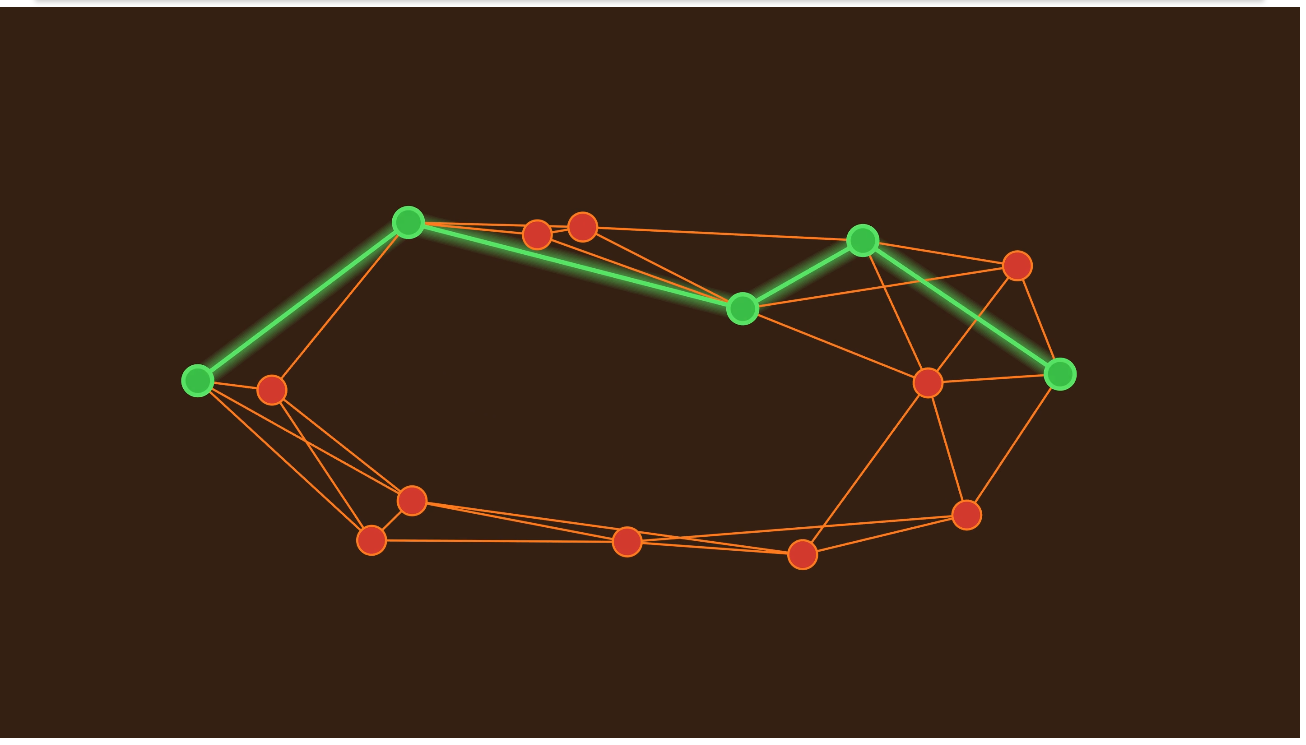

A picture from Quanta magazine.

A picture from Quanta magazine.

Sampling-based algorithms

Here’s a fundamental problem in statistics: There is some unknown distribution \(D\) supported on \([0, 1]\) and we want to estimate its mean – let’s say that our output should be correct up to additive \(\pm \epsilon\) error with 99% probability. The standard solution is to sample \(O(1/\epsilon^2)\) samples and return their mean; this is the best possible complexity in the worst-case sense. <!– Here it is:

- \(T_1 \leftarrow 1/\epsilon, \; x_1, \dots, x_{T_1} \leftarrow\) sample from input

- \[\hat{\mu} \leftarrow \frac{1}{T_1} \sum_{i = 1}^{T_1} x_i, \; \hat{\sigma}^2 \leftarrow \frac{1}{T_1-1} \sum_{i = 1}^{T_1} (\hat{\mu} - x_i)^2\]

- \[T_2 \leftarrow O(1/\epsilon + \hat{\sigma}^2/\epsilon^2)\]

- Sample additional \(T_2\) samples and return their mean –>

However, it turns out there is also an instance-optimal solution! Basically, you can first sample just a few samples and guess the variance of the distribution; this allows you to potentially lower the amount of samples you then need for the final estimate. We analyzed the instance-optimal estimation of the mean, median and some other problems in a paper with Shyam Narayanan, Jakub Tětek, and Mikkel Thorup, and later found out that the mean case was already analyzed in an earlier paper.

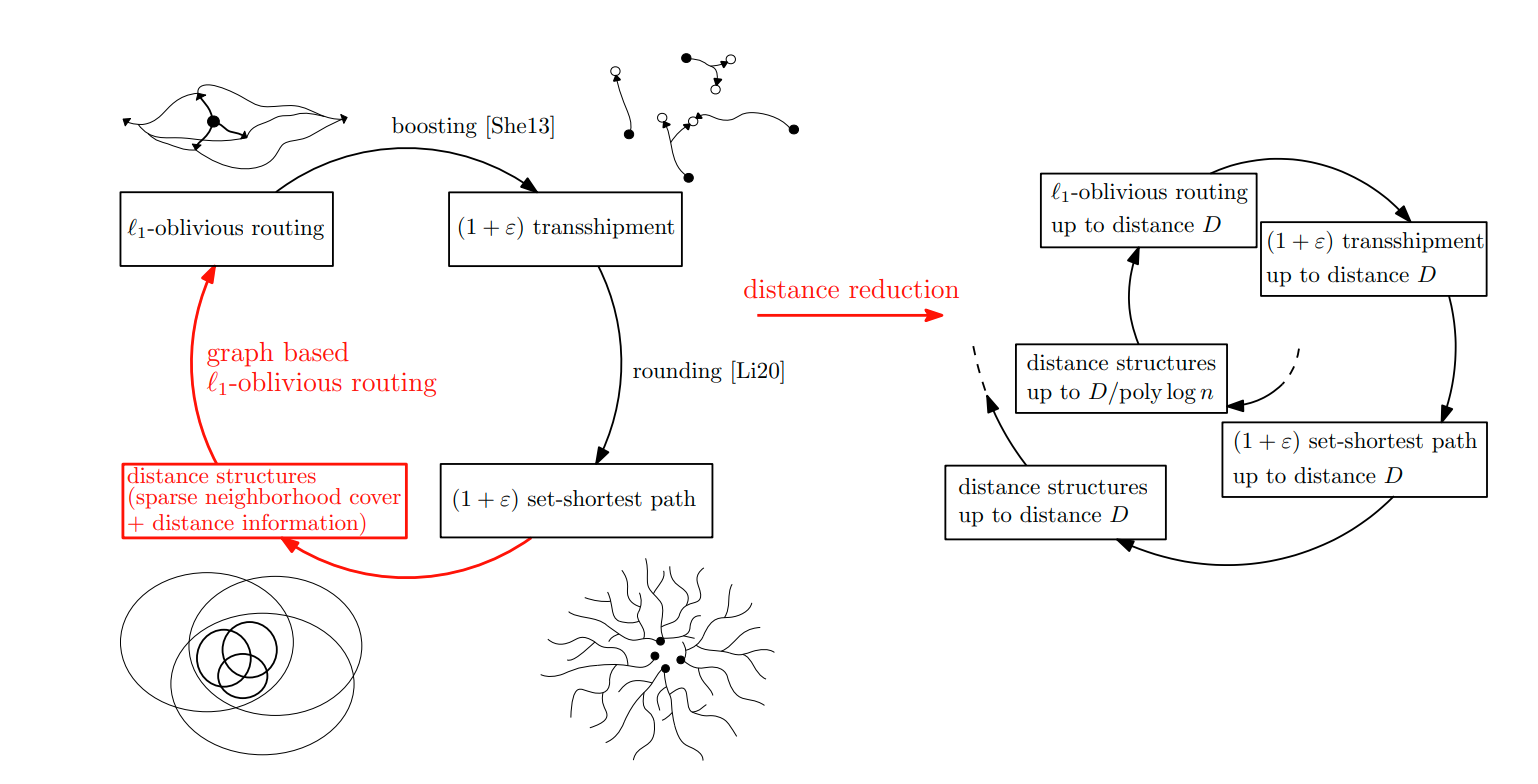

Distributed algorithms

In a series of amazing papers, Bernhard Haeupler and many others developed the technology for designing approximately-universally-optimal algorithms for distributed algorithms. In two papers with Christoph Grunau, Bernhard Haeupler, Michael Elkin, Jason Li, and Goran Zuzic, we showed how to design universally-optimal algorithms for a bunch of problems, including computing approximate shortest paths.